Regularization Machine Learning Example

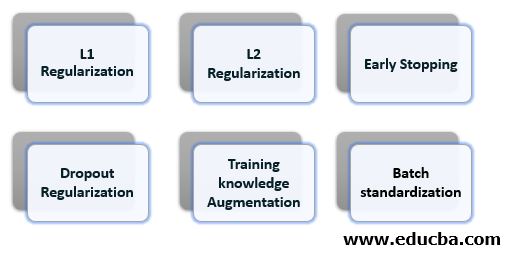

Early stopping, that is, limiting the number of training steps or the learning rate.

Regularization In Machine Learning And Deep Learning By Amod Kolwalkar Analytics Vidhya Medium

The matrix is typically huge and very sparse, and most of its values are missing.
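A matrix like this is usually stored by recording only the observed entries. Here is a minimal sketch that assumes a hypothetical recommender setting, with rows as users and columns as items. The user names, item names, and ratings are invented for illustration:

```python
# Hypothetical user-item ratings: only observed (user, item) pairs are stored.
ratings = {
    ("alice", "item_1"): 5.0,
    ("alice", "item_3"): 2.0,
    ("bob", "item_2"): 4.0,
}
users = ["alice", "bob", "carol"]
items = ["item_1", "item_2", "item_3", "item_4"]

def density(ratings, users, items):
    """Fraction of the full users x items matrix that is actually observed."""
    return len(ratings) / (len(users) * len(items))

def get_rating(ratings, user, item):
    """Return the observed rating, or None when the value is missing."""
    return ratings.get((user, item))

print(f"density = {density(ratings, users, items):.2f}")  # 3 / 12 = 0.25
print(get_rating(ratings, "carol", "item_1"))  # None: never observed
```

Only three of the twelve cells exist, and every missing cell costs no memory. This saving is why sparse storage is the usual choice for such matrices.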

L2 regularization.

Because learning is a prediction problem, the goal is not to find a function that most closely fits the previously observed data, but to find one that will most accurately predict output from future input.

One example of regularization is Tikhonov regularization (also known as ridge or L2 regularization). Note that z is also referred to as the log-odds, because the inverse of the sigmoid states that z can be defined as the log of the probability of the 1 label (e.g., "dog barks") divided by the probability of the 0 label (e.g., "dog doesn't bark"). Supervised learning is the type of machine learning in which machines are trained using well-labelled training data and, on the basis of that data, predict the output.
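You can check the relationship between z and the probability numerically. This is a minimal sketch, and the function names sigmoid and log_odds are chosen for illustration:

```python
import math

def sigmoid(z: float) -> float:
    """Map a real-valued score z to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def log_odds(p: float) -> float:
    """Inverse of the sigmoid: z = log(p / (1 - p))."""
    return math.log(p / (1.0 - p))

# If the model says P("dog barks") = 0.8, the odds are 0.8 / 0.2 = 4,
# so the log-odds z is log(4), roughly 1.386.
z = log_odds(0.8)
print(round(z, 3))           # 1.386
print(round(sigmoid(z), 3))  # 0.8, recovering the original probability
```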

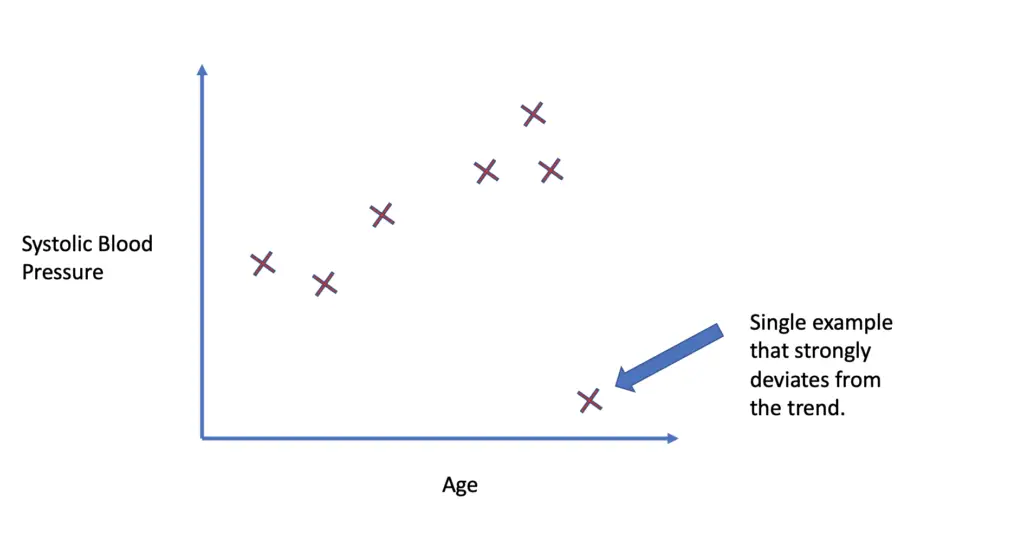

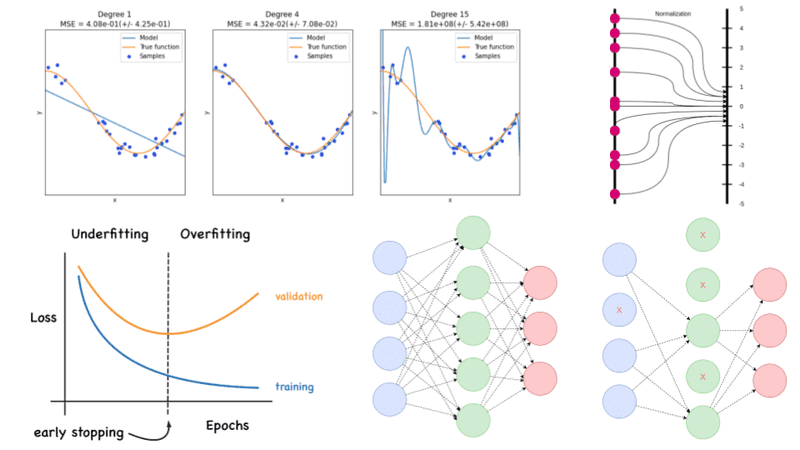

Usually, ML libraries pre-set a default learning rate, and the default depends on the optimizer (in TensorFlow's Keras API, for instance, the SGD optimizer defaults to 0.01 and Adam to 0.001). For example, line plots of the loss we seek to minimize, computed on the train and validation datasets, will show two lines. The training-dataset line drops and may plateau. The validation-dataset line drops at first and then, at some point, begins to rise again. Unfortunately, precision and recall are often in tension: improving one typically reduces the other.
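Early stopping exploits exactly this validation-loss pattern. Here is a minimal sketch that assumes a precomputed list of per-epoch validation losses (the numbers are invented). It also uses a hypothetical patience parameter, which sets how many non-improving epochs to tolerate:

```python
def early_stopping_epoch(val_losses, patience=3):
    """Return the epoch whose weights early stopping would keep.

    Training stops once the validation loss has not improved for
    `patience` consecutive epochs; the best epoch seen so far is returned.
    """
    best_epoch = 0
    best_loss = float("inf")
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # validation loss has stopped improving
    return best_epoch

# Made-up validation curve: drops at first, then begins to rise.
val_losses = [0.90, 0.70, 0.55, 0.50, 0.52, 0.56, 0.61, 0.70]
print(early_stopping_epoch(val_losses))  # 3
```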

Is there any machine learning model, such as Multivariate Adaptive Regression Splines (MARS), that can select a small number of features? The course uses the open-source programming language Octave instead of Python or R for the assignments. These are critical to the performance of machine learning methods.

Implicit regularization is all other forms of regularization. Feature F1 is an example of an ordinal variable.

Machine learning is generally categorized into three types. The commonly used regularization techniques are L1 regularization, L2 regularization, and dropout. In supervised learning, the machine experiences the examples along with the labels or targets for each example.

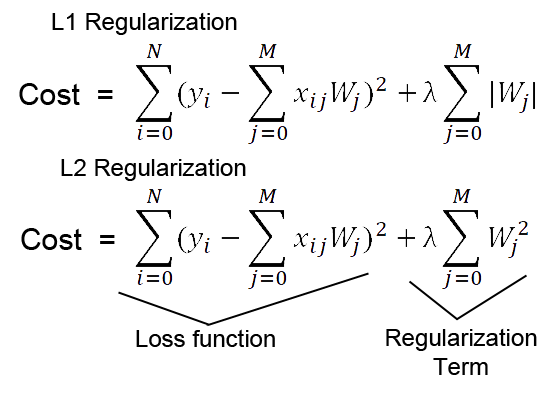

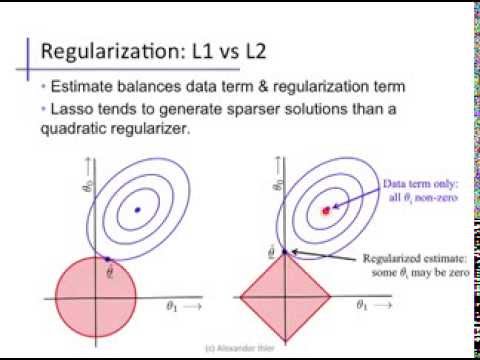

Questions and solutions on SVMs, decision trees, random forests, and more. Update the grid search example to search within the best-performing order of magnitude of parameter values. For example, L2 regularization relies on a prior belief that weights should be small and normally distributed around zero.
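The link between L2 regularization and a normal prior can be shown in one derivation. It assumes each weight w_j has an independent zero-mean normal prior with variance sigma^2, which is an assumption made here for illustration. Under that assumption, the negative log-prior is a scaled squared L2 norm:

```latex
-\log p(\mathbf{w})
  = -\sum_{j} \log\!\left( \frac{1}{\sqrt{2\pi\sigma^2}}
      \exp\!\left(-\frac{w_j^2}{2\sigma^2}\right) \right)
  = \frac{1}{2\sigma^2} \sum_{j} w_j^2 + \text{const}
  = \lambda \lVert \mathbf{w} \rVert_2^2 + \text{const},
  \qquad \lambda = \frac{1}{2\sigma^2}.
```

Adding this term to the loss (maximum a posteriori estimation) therefore gives an L2 penalty. A smaller prior variance means a larger lambda, and so a stronger pull of the weights toward zero.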

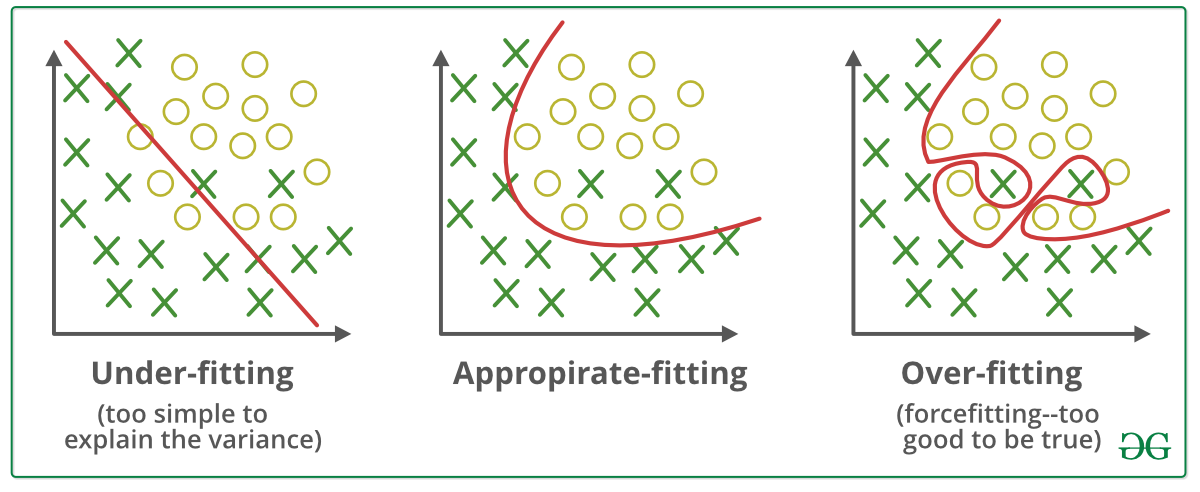

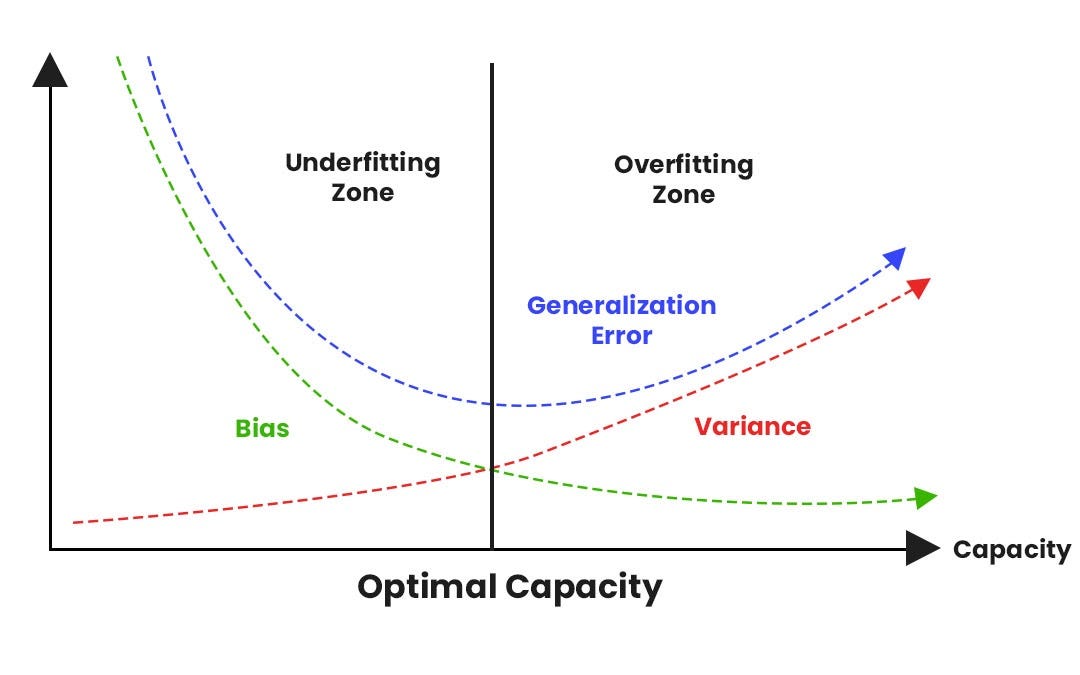

To fully evaluate the effectiveness of a model, you must examine both precision and recall. The most common type of embedded feature selection method is regularization. The regularization procedures discussed below, which are machine learning's primary defense against overfitting, rely on a choice of hyperparameters (synonymously, tuning parameters).
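Both metrics come straight from counts of true positives (TP), false positives (FP), and false negatives (FN). Here is a minimal sketch. The counts are hypothetical, picked so that recall comes out near 0.11:

```python
def precision(tp: int, fp: int) -> float:
    """Of everything predicted positive, the fraction that was actually positive."""
    return tp / (tp + fp)

def recall(tp: int, fn: int) -> float:
    """Of everything actually positive, the fraction the model found."""
    return tp / (tp + fn)

# Hypothetical tumor-classifier counts.
tp, fp, fn = 1, 1, 8
print(f"precision = {precision(tp, fp):.2f}")  # 0.50
print(f"recall    = {recall(tp, fn):.2f}")     # 0.11
```

A model can look acceptable on one metric and poor on the other. That gap is why the two are examined together.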

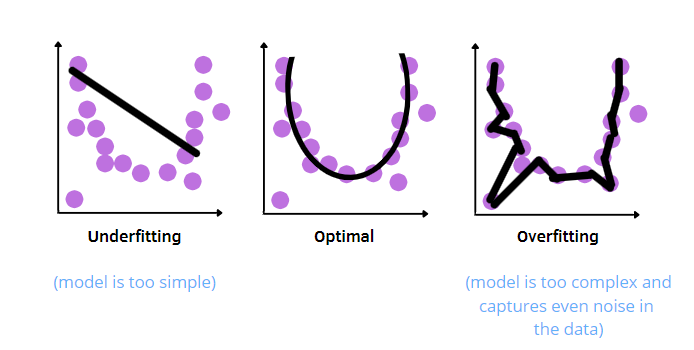

Regularization is a technique used to reduce errors by fitting the function appropriately on the given training set and avoiding overfitting. If you don't specify a regularization function, the model can become completely overfit. Try regularization strengths such as 1E-6, 1E-5, etc., and see if this results in a better-performing model on the test set.
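A one-feature ridge regression is enough to sketch a sweep of the regularization strength over orders of magnitude. Without an intercept, its closed-form weight is w = sum(x*y) / (sum(x*x) + lambda). The data and helper names below are hypothetical:

```python
def fit_ridge_1d(xs, ys, lam):
    """Closed-form ridge weight for y ~ w * x (no intercept)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + lam)

def mse(xs, ys, w):
    """Mean squared error of the prediction w * x."""
    return sum((y - w * x) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Small made-up train/test split.
x_train, y_train = [1.0, 2.0, 3.0], [1.2, 1.9, 3.3]
x_test, y_test = [4.0, 5.0], [3.9, 5.1]

# Try increasing regularization strengths: 1E-6, 1E-5, ..., 1E0.
# (Evaluated on the test set as in the text; a separate validation
# set is usually preferable when actually tuning lambda.)
results = {}
for exponent in range(-6, 1):
    lam = 10.0 ** exponent
    w = fit_ridge_1d(x_train, y_train, lam)
    results[lam] = mse(x_test, y_test, w)

best_lam = min(results, key=results.get)
for lam, err in results.items():
    print(f"lambda={lam:.0e}  test MSE={err:.4f}")
print("best lambda:", best_lam)
```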

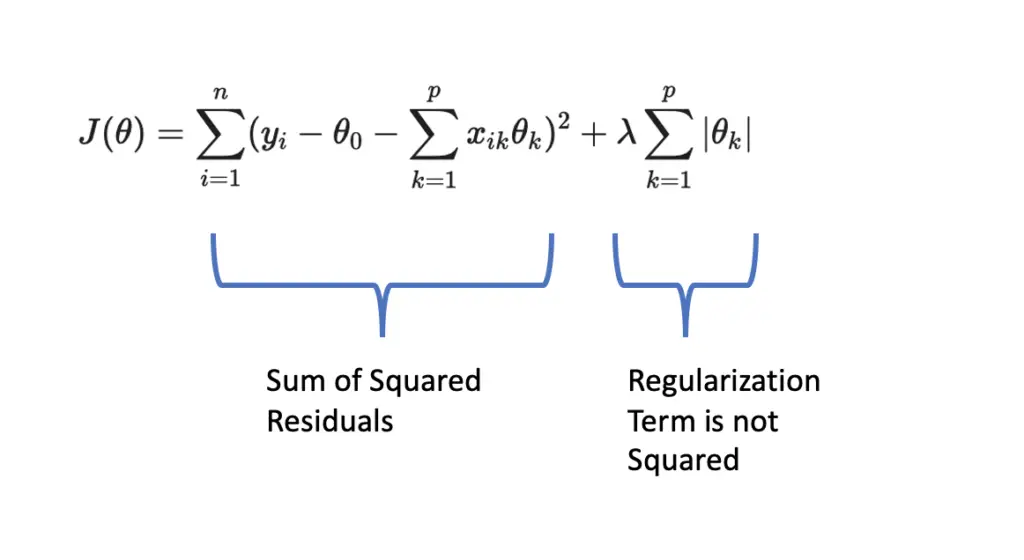

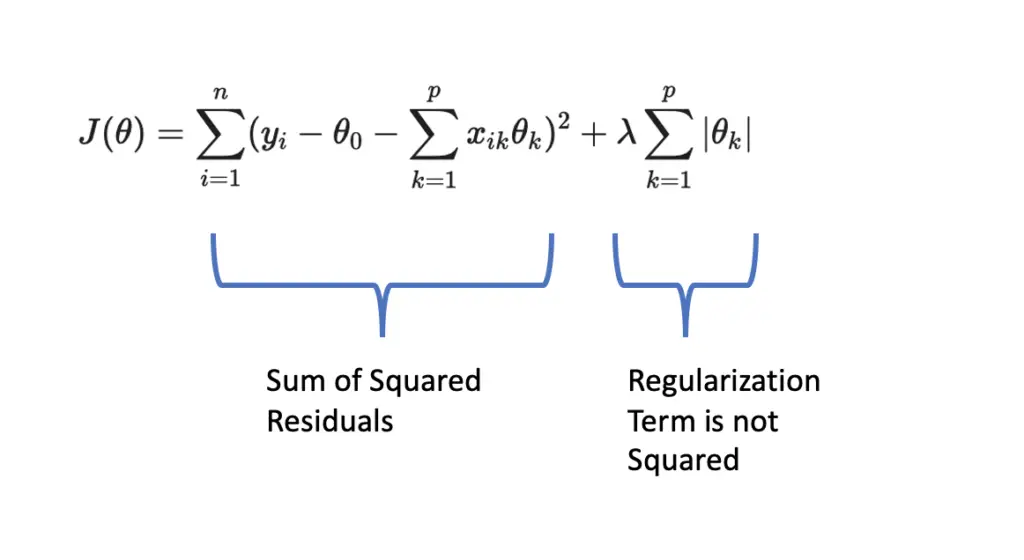

Regularization terms are modifications of a loss function that penalize complex models. An example of a wrapper method is the recursive feature elimination algorithm. Elastic net regularization is commonly used in practice and is implemented in many machine learning libraries.
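Elastic net combines the L1 and L2 penalties. Here is a minimal sketch of the penalty term alone, using one common convention: lam sets the overall strength and alpha mixes L1 against L2. scikit-learn's ElasticNet uses the same shape, with its alpha playing the role of lam here and its l1_ratio the role of alpha:

```python
def elastic_net_penalty(weights, lam, alpha):
    """Elastic-net regularization term.

    Convention assumed here: lam * (alpha * L1 + (1 - alpha) / 2 * squared L2).
    alpha = 1 gives pure L1 (lasso); alpha = 0 gives pure L2 (ridge).
    """
    l1 = sum(abs(w) for w in weights)
    l2_squared = sum(w * w for w in weights)
    return lam * (alpha * l1 + (1.0 - alpha) / 2.0 * l2_squared)

w = [0.5, -2.0, 0.0, 1.5]
print(elastic_net_penalty(w, lam=0.1, alpha=1.0))  # L1 only
print(elastic_net_penalty(w, lam=0.1, alpha=0.0))  # L2 only
print(elastic_net_penalty(w, lam=0.1, alpha=0.5))  # a mix of both
```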

Linear Regression with One Variable. The cells of the table count the predictions made by a machine learning algorithm for each combination of predicted and actual class. A Tug of War.
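Each cell of such a table counts one combination of actual and predicted label. Here is a minimal sketch for a binary 0/1 classifier, using made-up labels:

```python
from collections import Counter

def confusion_matrix(actual, predicted):
    """Count (actual, predicted) pairs for a binary 0/1 classifier."""
    counts = Counter(zip(actual, predicted))
    return {
        "TP": counts[(1, 1)],  # predicted 1, actually 1
        "FP": counts[(0, 1)],  # predicted 1, actually 0
        "FN": counts[(1, 0)],  # predicted 0, actually 1
        "TN": counts[(0, 0)],  # predicted 0, actually 0
    }

actual = [1, 0, 1, 1, 0, 0, 1, 0]
predicted = [1, 0, 0, 1, 1, 0, 1, 0]
print(confusion_matrix(actual, predicted))
# {'TP': 3, 'FP': 1, 'FN': 1, 'TN': 3}
```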

Repeated Regularization of Model. In machine learning problems, a major problem that arises is overfitting.

Where C is the regularization parameter and w1 and w2 are the coefficients of x1 and x2. However, it might not be the best learning rate for your model. Regularization is a set of techniques that can prevent overfitting in neural networks and thus improve the accuracy of a deep learning model when it faces completely new data from the problem domain.

For example, a machine learning model that evaluates email messages and outputs either "spam" or "not spam" is a binary classifier. This beginner's course was created and is taught by Andrew Ng, a Stanford professor, co-founder of Google Brain, co-founder of Coursera, and the VP who grew Baidu's AI team to thousands of scientists. Exercises are done in MATLAB R2017a.

Course Schedule: Week 1. Our training optimization algorithm is now a function of two terms. Entropy regularization is used when the output of the model is a probability distribution, for example in classification, policy-gradient reinforcement learning, etc.

Create a new example that continues training a fit model with increasing levels of regularization. Our model has a recall of 0.11; in other words, it correctly identifies 11% of all malignant tumors. The two terms are the loss term, which measures how well the model fits the data, and the regularization term, which measures model complexity.
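The two-term training objective can be written out directly. Here is a minimal sketch that assumes a linear model, with mean squared error as the loss term and the sum of squared weights as the complexity measure. That is one common choice, not the only one, and lam stands for the regularization rate lambda:

```python
def data_loss(xs, ys, weights):
    """Mean squared error of a linear model (the loss term)."""
    preds = [sum(w * xi for w, xi in zip(weights, x)) for x in xs]
    return sum((p - y) ** 2 for p, y in zip(preds, ys)) / len(ys)

def l2_complexity(weights):
    """Sum of squared weights (the regularization term)."""
    return sum(w * w for w in weights)

def total_loss(xs, ys, weights, lam):
    """Training objective: loss term + lambda * regularization term."""
    return data_loss(xs, ys, weights) + lam * l2_complexity(weights)

xs = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
ys = [1.0, 2.0, 3.0]
weights = [1.0, 2.0]  # fits this toy data exactly
print(total_loss(xs, ys, weights, lam=0.0))  # 0.0: no penalty
print(total_loss(xs, ys, weights, lam=0.1))  # 0.5: only the penalty remains
```

Even a perfect fit is charged for large weights once lam is positive. This charge is what pushes the optimizer toward simpler models.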

This is the course against which all other machine learning courses are judged. This includes, for example, early stopping, using a robust loss function, and discarding outliers. This repository consists of my personal solutions to the programming assignments of Andrew Ng's Machine Learning course on Coursera.
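The text does not name a specific robust loss function. The Huber loss is one widely used example, shown here as a minimal sketch with delta = 1.0 chosen arbitrarily:

```python
def huber_loss(residual: float, delta: float = 1.0) -> float:
    """Huber loss: quadratic for small residuals, linear for large ones.

    Large residuals (e.g. from outliers) grow only linearly, so they
    pull on the fit much less than under squared error.
    """
    a = abs(residual)
    if a <= delta:
        return 0.5 * a * a
    return delta * (a - 0.5 * delta)

for r in [0.5, 2.0, 10.0]:
    # residual, half squared error, Huber loss
    print(r, 0.5 * r * r, huber_loss(r))
```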

The result is a simpler and often better model. Overfitting is a phenomenon that occurs when a machine learning model fits the training set so closely that it cannot perform well on unseen data. Chapter 7, "Regularization for Deep Learning," Deep Learning (2016).

In this article, we will address the most popular regularization techniques: L1, L2, and dropout. The task of machine learning is to learn a function that predicts the utility of items to each user. I will try my best to.
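Of the three techniques, dropout is the one specific to neural networks. Here is a minimal sketch of the common "inverted dropout" formulation on a plain list of activations. Real frameworks apply it to tensors through a dropout layer, and the function here is hypothetical:

```python
import random

def dropout(activations, rate, training=True, rng=random):
    """Inverted dropout on a list of activations (assumes 0 <= rate < 1).

    During training each unit is zeroed with probability `rate`, and the
    survivors are scaled by 1 / (1 - rate) so the expected value is unchanged.
    At inference time the activations pass through untouched.
    """
    if not training or rate == 0.0:
        return list(activations)
    keep = 1.0 - rate
    # Keep each unit with probability `keep`; scale survivors by 1 / keep.
    return [a / keep if rng.random() < keep else 0.0 for a in activations]

rng = random.Random(0)  # seeded for reproducibility
h = [1.0, 2.0, 3.0, 4.0]
print(dropout(h, rate=0.5, rng=rng))         # some units zeroed, others doubled
print(dropout(h, rate=0.5, training=False))  # unchanged at inference
```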

The x values are the feature values for a particular example. Unlike parameters, hyperparameters are specified by the practitioner when configuring the model. So the best option is to set the learning rate manually between 0.0001 and 1.0 and experiment, seeing what gives you the best loss without taking hours to train.
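The effect of the learning rate shows up even on a one-parameter problem. Here is a minimal sketch that uses the toy loss f(w) = (w - 3)^2 rather than a real model, and sweeps learning rates across the range mentioned above:

```python
def gradient_descent(lr, steps=50, w0=0.0):
    """Minimize the toy loss f(w) = (w - 3)^2 with plain gradient descent."""
    w = w0
    for _ in range(steps):
        grad = 2.0 * (w - 3.0)  # derivative of (w - 3)^2
        w -= lr * grad
    return w, (w - 3.0) ** 2

for lr in [0.0001, 0.001, 0.01, 0.1, 1.0]:
    w, loss = gradient_descent(lr)
    print(f"lr={lr:<7} final loss={loss:.6f}")
```

On this loss, 0.0001 barely moves from the start, 0.1 converges, and 1.0 bounces between w = 0 and w = 6 forever. That is the trade-off the manual search is meant to expose.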

For example, a machine learning algorithm can predict 0 or 1, and each prediction may actually have been a 0 or 1. We'll discuss a third strategy, L1 regularization, in a later module. Imagine that you assign a unique ID to each example and map each ID to its own feature.

An example is a collection of features. Machine Learning Crash Course focuses on two common and somewhat related ways to think of model complexity. Feature F1 is an example of a nominal variable.

Hyperparameters are different from parameters, which are the internal coefficients or weights of a model found by the learning algorithm. Instead of directly using the norm of the weights in the loss term, the entropy regularizer includes the entropy of the output distribution, scaled by lambda.
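Here is a minimal sketch of an entropy regularizer for a classifier's output distribution. It assumes cross-entropy as the data loss and assumes the entropy is subtracted, so higher-entropy (less overconfident) outputs are rewarded. This sign convention matches a confidence penalty or an entropy bonus, and lam is a hypothetical name for lambda:

```python
import math

def entropy(probs):
    """Shannon entropy (in nats) of a discrete probability distribution."""
    return -sum(p * math.log(p) for p in probs if p > 0.0)

def entropy_regularized_loss(probs, true_index, lam):
    """Cross-entropy on the true class minus lam * entropy of the output.

    Subtracting the entropy rewards less overconfident (higher-entropy)
    output distributions; lam controls how strongly.
    """
    cross_entropy = -math.log(probs[true_index])
    return cross_entropy - lam * entropy(probs)

confident = [0.98, 0.01, 0.01]
hedged = [0.70, 0.15, 0.15]
print(entropy(confident), entropy(hedged))  # the hedged output has higher entropy
print(entropy_regularized_loss(hedged, true_index=0, lam=0.1))
```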

Supervised learning, unsupervised learning, and reinforcement learning.

Machine learning algorithms have hyperparameters that allow you to tailor the behavior of the algorithm to your specific dataset.
